Author: seamus

Maha Bali, from her blog, Reflecting Allowed

Maha Bali is a professor of practice at the American University in Cairo. In her talk, Bali touches on something that I have repeatedly run into during this course: the idea of “bias-free” technology. In essence, it is the idea that you can fix complicated problems of economic and political inequality by imposing the same requirements on all participants. One example she gives is Wikipedia, the open collaborative encyclopedia. She says it achieves economic justice by being free and political justice by being open for anyone to edit, but it often fails at cultural justice: because people have to agree on what to add or remove, what is present or absent often depends on the prevailing views on the topic.

She also touches on how these same patterns persist even in the absence of any actual people. Predictive text programs like ChatGPT and other generative AI software are also subject to these prevailing views. Whether the medium is an algorithm devoid of human touch, an open-source internet encyclopedia, or an in-person group of people discussing modern issues, it always comes down to the political systems that shape, and damage, our ability to comprehensively view and analyze modern biases.

Bali, Maha. Reflecting Allowed: Maha Bali’s blog about education. https://blog.mahabali.me/

Rogue State, by William Blum

William Blum is an American journalist who spent his career covering military interventions carried out by the US military and the CIA. His book Rogue State, released in 2000, covers the many methods the United States employs in combating, mainly, third-world political movements. In Rogue State, he also covers the mass digital surveillance carried out internationally by American intelligence agencies, especially the NSA. This, obviously, makes it a terribly useful resource for me in this project.

It was simple coincidence that brought this book to me; I had actually taken it out from the UVic library several weeks ago. I picked it up after reading Blum’s other book, Killing Hope, which is excellent but does not specifically cover the US’s methods of digital surveillance. I am aware of how lucky I am to have such a good resource on hand while working on this project.

A quote of his that I thought relevant.

Blum, W. (2000). Rogue State: A Guide to the World’s Only Superpower. Common Courage Press.

Two excellent resources available to me are the interviews and presentations of Chris Gilliard and Ian Linkletter. Ian Linkletter is an Emerging Technology and Open Education Librarian at the British Columbia Institute of Technology. He is also a public rights activist and has been raising awareness of SLAPP lawsuits ever since he was sued by a monitoring software company. Chris Gilliard is the co-director of the Critical Internet Studies Institute; he also researches ‘luxury surveillance,’ and has a book of the same name coming out this year.

Ian Linkletter

In his podcast-style talk, Chris Gilliard spoke about the growth of digital surveillance, both online and offline. One of the concepts he discussed was “luxury surveillance,” where expensive, high-status technology, like Apple Watches and smart cars, acts as a set of high-tech security cameras that constantly watch you. It is somewhat sinister how large companies spend millions of dollars on mass psychological campaigns to convince people that their products, which they make to sell to you for money, are the height of necessity and that owning them reflects well on your social standing. This is especially true when these products are then used to track and monitor the people who use them, and now there is a new push to wear some kind of necklace or pin that is always on you and always listening.

Ian Linkletter focuses on the many ways digital surveillance is used to monitor students unethically. Digital proctoring is the use of software to detect whether a student is cheating while doing schoolwork online. The software observes you while you work, tracking your head movements and checking whether you are still in frame. He covered how this software more often flags students who are neurodivergent or disabled, because their behaviour is more likely to fit the vague descriptions of ‘suspicious’ actions that the programs are built around. Proctoring software that scans a computer’s webcam feed to check whether a student’s face is present has also been known to discriminate, this time against people with darker complexions. Its advocates may claim that the software is ‘colorblind,’ and in one sense it quite literally is, but this does not change the fact that these unthinking programs still carry the existing biases of the people who made them, as I pointed out in my blog post on Nodin Cutfeet’s talk.

The talk with Nodin Cutfeet went over digital literacy and how it applies to Indigenous peoples. One of the most interesting points raised early in the talk was how generative AIs like ChatGPT have a negative effect on Indigenous communities and cultures. They spoke about how generative AI tools give inaccurate information and perspectives on Indigenous cultures and tend to homogenize them: even when asked about a specific people, they often blend several cultures together. It reminded me how, even when new technologies and perspectives claim to be ‘colorblind’ or unbiased, they often absorb the prevailing views that existed before them and, in doing so, reinforce them.

Nodin Cutfeet specializes in running digital literacy workshops in underserved communities. The Waniskâw Foundation, founded by Cutfeet, is the organization that runs these workshops, which include coding and art classes. A very interesting part of the talk was the difficulty they encountered in hosting workshops in communities that are underfunded and lack resources. They mentioned how many of their students lack a conventional computer and often use Xboxes and other game consoles for their web browsing features. It shows that a good teacher is not one who demands specific equipment from their students, but one who accommodates their students’ situations and what they have on hand.

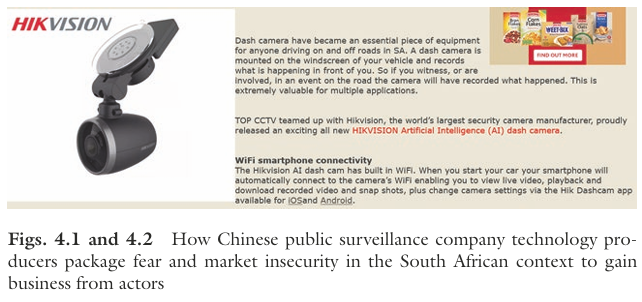

The first step I took in researching my deep-dive topic with the rest of my pod was to search the UVic library. The first resource that caught my eye was Digital Surveillance in Southern Africa: Policies, Politics and Practices by Allen Munoriyarwa and Admire Mare. The book covers the many methods of public and digital surveillance and the political justifications used to establish and maintain them. The book’s preface states that surveillance is becoming increasingly common around the world, and that South Africa is becoming an especially bad case due to the lack of transparency and regulation and the politicized nature of surveillance there.

Munoriyarwa and Mare detail the variety of methods used by the South African government and corporations to push for greater surveillance. They explain how public surveillance companies promote fear in order to win their customers’ business:

Overall, the book is a good resource covering both the general methods and the coercive political environment that make this kind of wide-scale surveillance possible. It is an excellent resource, and not just for what it reveals about the situation in South Africa, since it gives a picture of the extreme lengths that can be reached when wide-ranging surveillance is normalized.

Munoriyarwa, A., & Mare, A. (2022). Digital Surveillance in Southern Africa: Policies, Politics and Practices (1st ed.). Springer International Publishing. https://doi.org/10.1007/978-3-031-16636-5

Another topic I knew very little about, and found highly interesting, was social annotation. Annotation is the act of adding a note of information or context to a piece of text or a diagram. Kalir’s body of work focuses on the possibility of adding further annotations, especially ones left by former readers, that include information to help later readers better understand a text.

He points out how articles and journals sometimes have highlighted text with extra information attached. I agree that annotation can add another dimension to writing, with extra information giving greater context to what is already there. He suggests that social annotation would allow a reader’s peers to contribute additional insights and observations to a text, potentially adding greater value to course material.

Recently, I checked out and read several books from the UVic library. Several of them carried markings in pencil and pen, sometimes very extensive, mainly highlighting text that had interested a previous reader. I often found myself wondering what they found interesting enough to highlight, and what thoughts they had about the text.

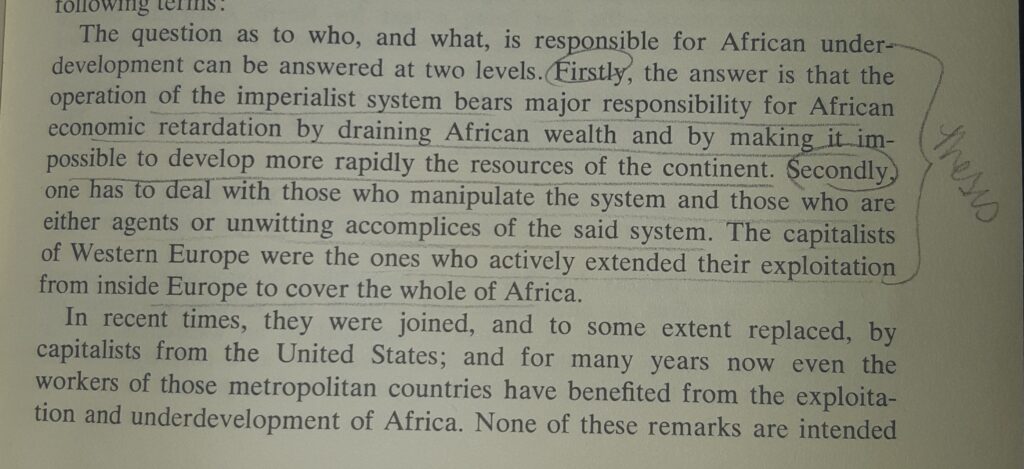

How Europe Underdeveloped Africa by Walter Rodney

I watched the fireside chat with Lucas Wright and came across a new term, “tool agnostic.” I looked it up, and it means being comfortable using any tool at your disposal without favoring any one tool overwhelmingly over the others (especially in an IT context). I feel this term is especially relevant when talking about AI. An agnostic is neither believer nor atheist, and since AI tends to take up a large amount of space in the public imagination, it is important to remember that at the end of the day it is still simply a tool. As a tool, it has several useful features, and Lucas Wright displayed some of his favorites. He showed how you can use custom GPTs built for specific purposes; his primary example was one made to write responses to emails, as seen below.

The GPT that Wright showcased in the talk could take a description of the email you want and then write the email based on your input:

Uses like this are, perhaps, less provocative than the many hypothetical uses and dangers of AI that have been proposed over the years, but I feel that this in and of itself is an important lesson. It is important not to place special emphasis on any new tool when it does not deserve it. AI in particular, partly due to years of “hyping up” by popular media, has received wide attention throughout its development, and this makes it easy to believe that it is either very, very good or very, very bad. In reality, it is going to be a bit of both, but at least emails will be easier to write.

I also saw the talk with Dr. Mariel Miller on the drawbacks and potential learning advantages of AI. There are potential advantages to using it (remember to be tool agnostic, as defined above), as long as you have a well-thought-out strategy for bringing AI into your learning process. If you fail to plan how to use generative AI in your learning, you may end up harming yourself and reducing your own credibility. Dr. Miller went over how you can use generative AI to summarize key findings in a report, make bullet points, and even improve the grammar of your own writing. You can also use generative AI to sum up information if you have difficulty with comprehension (although this may be inadvisable given the sometimes unreliable quality of AI writing).

As someone who often consumes media made by online content creators, I have mostly encountered copyright as a sudden strike against someone for using copyrighted music a few seconds too long, or for simply offering a critique of a piece of media and having some angry creator seek retribution. This talk helped me remember that copyright is not simply a tool for Disney to occasionally give some people a good slap (although this does happen) but also a real tool for protecting intellectual property.

One interesting point Inba Kehoe raised in the talk is that anything I create is protected by copyright law. This is honestly something I had never thought about; it can be important to receive a sharp tap on the head now and then and be reminded that you can exercise autonomy over your own intellectual rights. Another aspect of copyright I had not given much thought to was how it gives us reason to show respect towards our colleagues.

I think we should all remember that the works we create have real protections associated with them, and that these protections do not just apply to the products of large corporations. We can control how our works are used; deciding what happens to the products of our intellectual labour is well within our power.

We are currently living in a world of rapid and continuous technological development, and arguably one of the most substantial changes to the modern world has been in information. This course has students from a wide variety of majors and programs; my own program is biochemistry. Biochemistry might not seem especially relevant to digital media literacy, but I would argue that media literacy is still a crucial topic for me. In a world where mounds of information are easier to access than ever, it is exceptionally important to understand, create, and evaluate modern media ethically.

Last month, I managed to catch the interview with Mike Caulfield, the creator of the SIFT method. Much of his writing, and his interview, is concerned with online misinformation: more specifically, how to spot it and avoid incorporating it into your writing or academic work. His talk covered not only how to spot misinformation online but also some history of how people expected the internet to change things when it was first ‘coming onto the scene,’ so to speak. I found the talk reassuring. Sure, there is plenty of misinformation online, and many of the sources you find on the internet may not be entirely reliable, but you can still sift fact from fiction if you know how to examine a source critically.

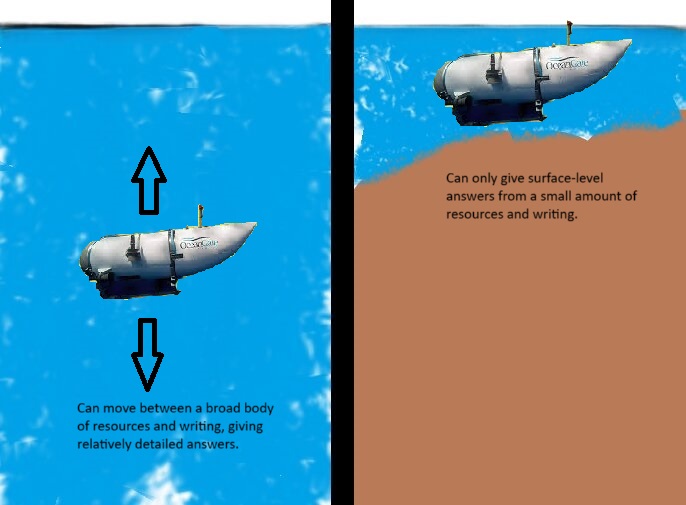

One of the sections of the talk I found most interesting was Mike speaking about the artificial-intelligence tools currently being developed. I expected him to dismiss AI as an ineffective research tool, and to be fair he did say it has limitations (you shouldn’t use it to write a blog post, for example), but he also said it is possible to use AI ethically. He said you could use software like ChatGPT to get a decent overview of topics that already have a broad pool of research, but that it is less useful for subjects with only a shallow body of knowledge. He used the interesting metaphor of a submarine, which moves freely through deep water where a broad swath of knowledge is accessible but struggles in the shallows; artist’s renditions are available below.

Overall, the talk gave me a better understanding of misinformation online. Some of the most useful practical advice was to pay close attention to any sources you use and to understand where a claim originally comes from. He also offered several insightful clarifications about AI, covering both its uses and its limitations. I came away from the talk feeling optimistic about my place in the digital landscape.